Prompting with Active Learning

What is Prompting

Prompting typically refers to providing an initial input or instruction to the model to generate a desired response or continuation. It involves giving the model a specific prompt text to set the context or guide its output. Prompting plays a crucial role in tasks such as question and answering, summarization and semi-structured prompting, with applications in extracting information in 10-Ks, summarizing medical charts, and identifying key clauses in legal contracts. Here are examples of a few prompts that a user can ask an LLM:

Question: What is Apple's revenue growth rate in this 10-K report?

Question: Can you summarize this long medical chart in 3 sentences?

Question: Which clauses in this legal contract seem anomalous?

Answers to these questions can add tremendous business value. While prompting has enabled many of these questions to be answered, leading to tremendous innovations in the field of artificial intelligence, there are limitations.

Model Hallucinations and Errors

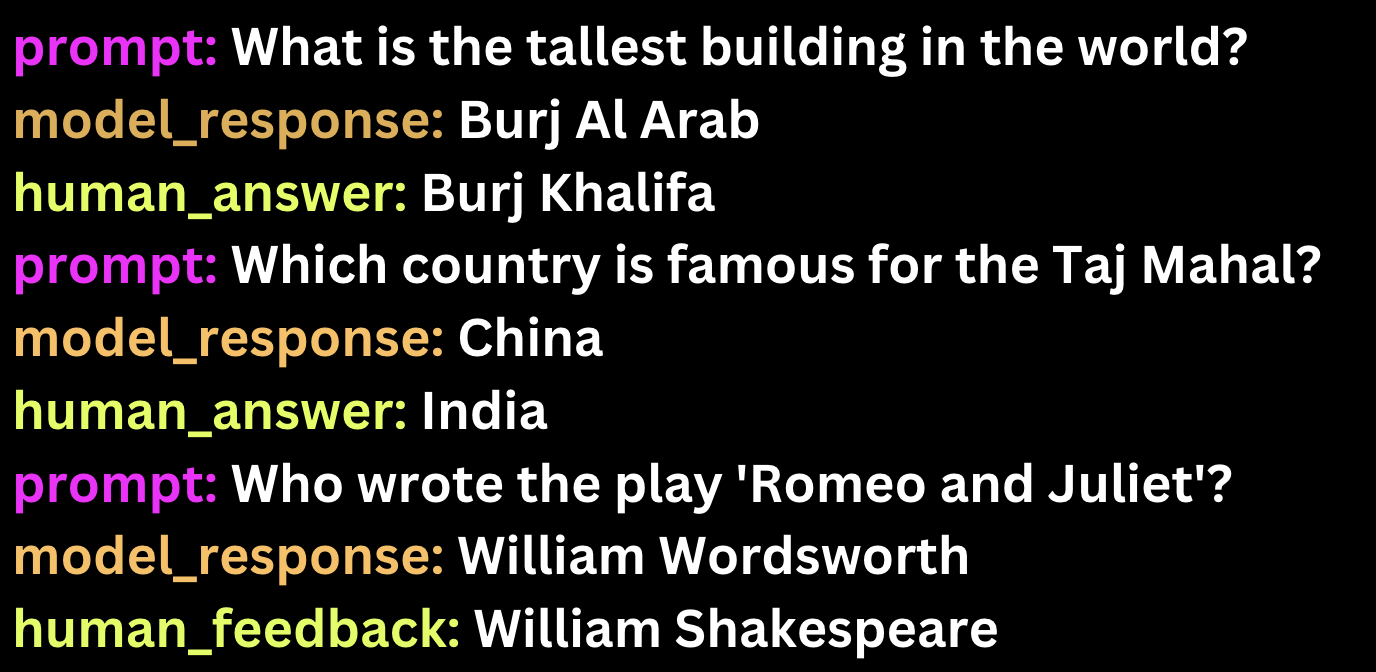

General purpose zero-shot LLMs, which are trained on a wide range of data, can sometimes generate responses that are factually incorrect or nonsensical. These hallucinations occur when the model generates information that is not supported by the training data or lacks factual basis. Here are a few examples of hallucinations:

Question: What happened during the Battle of Waterloo?

Model Response: The Battle of Waterloo was a fierce conflict between the British forces led by General John Smith and the alien invaders from Mars. The brave soldiers fought valiantly against the extraterrestrial creatures, ultimately emerging victorious and saving humanity from enslavement.

In this situation, the model hallucinates by introducing fictional elements into the response. It creates a scenario where the Battle of Waterloo involves a fictional conflict with alien invaders from Mars, deviating from the actual historical events.

Question: What is the recommended treatment for a common cold?

Model Response: For a common cold, it is advisable to consume large quantities of chocolate and engage in vigorous exercise to sweat out the illness. Additionally, singing loudly for an extended period can help expel the cold virus from the body.

In this situation, the model generates a response that offers incorrect and potentially harmful advice for treating a common cold. The suggestion to consume large quantities of chocolate and engage in intense exercise contradicts established medical recommendations, which typically emphasize rest, hydration, and over-the-counter remedies.

The Gap between LLMs and Human Answers

LLMs, including fine-tuned models, are general-purpose language models that do not directly incorporate human feedback during training. This creates a gap between their generated answers and human answers, leading to outputs that may not always align perfectly with human expectations or domain-specific knowledge. This is because LLMs are trained on diverse text but lack the nuanced understanding and contextual knowledge that human experts possess.

Question: What are some effective strategies for improving productivity at work?

LLM Response: To improve productivity, you can try creating a to-do list to minimize distractions.

In this situation, the response suggests a generic strategy for improving productivity that may be applicable in general terms. However, it may not offer industry-specific insights, tailored advice, or nuanced approaches that an expert in organizational psychology or productivity consulting would provide.

Question: What is the best strategy for long-term financial investments?

Model Response: A good strategy for long-term financial investments is to diversify your portfolio across different asset classes such as stocks, bonds, and real estate.

In this situation, the response suggests a generally sensible approach to long-term financial investments by emphasizing diversification and periodic review, it lacks the depth of expertise that a financial advisor or investment professional would provide. These experts consider additional factors such as individual risk profiles, market conditions, and specific investment opportunities that can significantly impact long-term investment success. Human expertise is invaluable in tailoring investment strategies to individual circumstances and providing personalized advice beyond general guidelines.

These examples further exemplify the limitations of LLMs in providing accurate and realistic answers that align with human expertise. While LLMs can generate creative responses, they may lack the practicality, reliability, and depth of knowledge that human professionals possess.

The Need for Active Prompting

Active prompting is crucial in the context of language models to mitigate issues such as model hallucinations and errors that can occur with zero-shot LLMs. While LLMs have shown remarkable capabilities in summarizing text, and answering questions, they are not immune to producing incorrect or misleading information. While traditional approaches to prompting rely solely on asking questions to LLMs, actively learning from human input can significantly enhance the accuracy and efficiency of the prompting process. Via active prompting, we are able to incorporate human feedback into these LLMs. This enables these general purpose LLMs to be directly applicable to specific, tailored domains of human interest, while mitigating the hallucinations that LLMs make.

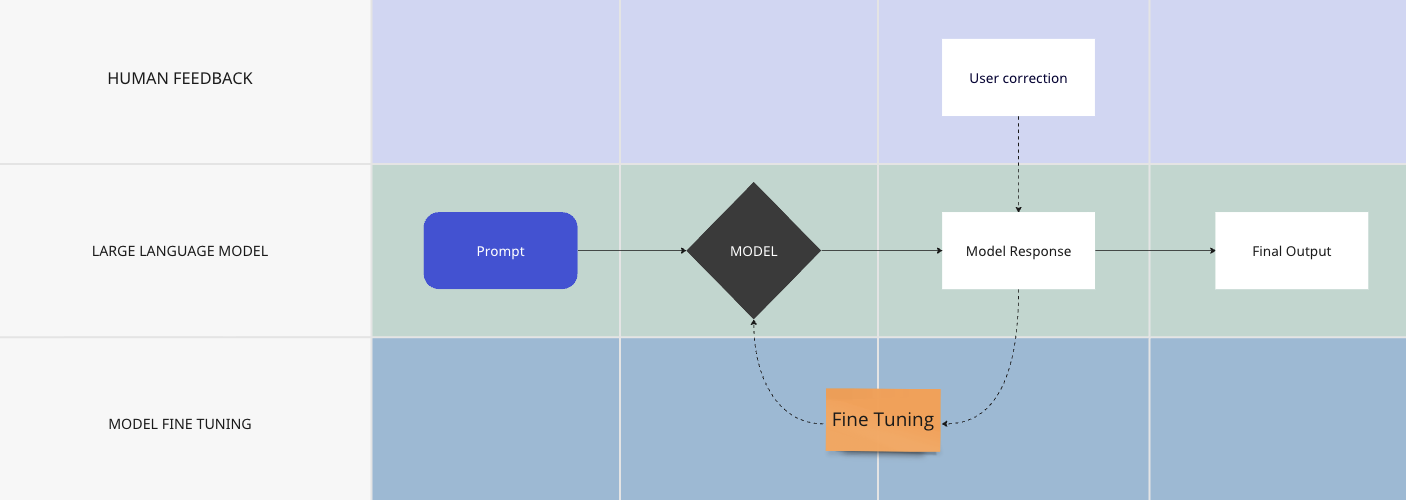

In the context of language models like Anote, active prompting involves guiding the model's responses by allowing users to modify the result from LLMs. We start with the output from the LLM on the given query, have the human to be able to change the result. When the human changes the result, the model is able to learn based on the difference between its predicted output and the actual output from the human. By providing this structure, the model can learn from the user to become more domain specific over time. The user can guide the model's attention towards the specific areas of interest, and in turn receive more relevant and accurate responses. This can be very useful when there are thousands to millions of rows of text data that users are prompting models over, where we can learn from human feedback on a few datapoints to ensure that the models predictions align with domain specific expectations.

Active Prompting Process Overview

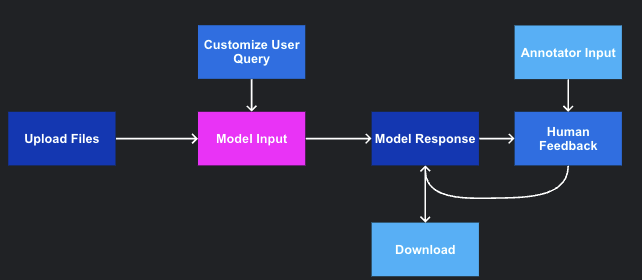

On Anote, the active prompting process typically involves the following steps:

Upload Files

Users can upload text data, in the format of PDFs, DOCX files, TXT files, CSVs, Websites, Powerpoints or Spreadsheets.

Customize User Query

Users define a prompt. Users interact with the model by inputting their prompts or questions to the model. The uploaded files and the user query go into the model input. There are different types of prompts that a user can input:

Unstructured Prompts

These can be prompts with freeform answers in text format. Summarization and Question and Answering are two of the most common types of unstructured prompts. Here are two examples of unstructured prompts would be:

Question: Please summarize the main findings of these documents

Question: What are the primary risks and uncertainties the company faces? How might they impact its future performance?

Information Extraction Prompts

These can be prompts with freeform answers in text format, looking to find entities within text. Two examples of information extraction prompts would be:

Question: Find all instances of company names and emails in a website?

Question: Return in JSON all diseases found in this medical report?

Structured Prompts

These can be prompts with a standardized template that specifies the desired format or structure for the input. Two examples of structured prompts would be:

Question: Which of the following countries is not a member of the European Union?

Question: Given this resume, should I hire this candidate?

Semi Structured Prompts

These are combinations of structured, unstructured and information extraction prompts, where a user can ask multiple questions on a given document, with any type of output format.

Question: Summarize the main principles of Newton's laws of motion.

Question: Which of the following is not a primary color?

Model Response

The model then generates responses based on its understanding of the prompt and the available information. We start with generative AI models that have strong language modeling capabilities, such as GPT-3, Bard or Claude. These models can generate high-quality text based on prompts and exhibit understanding of context and semantics, though they are not by any means perfect.

Model Response: Newton's laws of motion describe the relationship between an object's motion and the forces acting upon it. They include the concepts of inertia, force, and action-reaction.

Model Response: The color that is not a primary color is D. Green.

Human Feedback and Iteration:

Users provide feedback on the generated responses, identifying any inaccuracies or areas for improvement. This feedback is crucial in refining the model's understanding and aligning its responses with user expectations. Users can iterate this process by modifying the prompts or providing additional feedback to enhance the model's performance.

Question: Summarize the main principles of Newton's laws of motion.

Model Response (Initial): Newton's laws of motion describe the relationship between an object's motion and the forces acting upon it. They include the concepts of inertia, force, and action-reaction.

User Feedback: The initial response is accurate but lacks specific details about each law. It would be helpful to provide a brief explanation of each law to offer a more comprehensive summary.

Updated Prompt: Summarize the main principles of Newton's laws of motion, including the laws of inertia, force, and action-reaction.

Model Response (Improved): Newton's laws of motion are fundamental principles in classical physics. The first law, known as the law of inertia, states that an object at rest tends to stay at rest, while an object in motion tends to stay in motion unless acted upon by an external force. The second law relates the force applied to an object to its mass and acceleration. Finally, the third law states that every action has an equal and opposite reaction. These laws provide a framework for understanding the relationship between motion and the forces acting on objects.

In this example, the user provides feedback on the initial response, highlighting the need for more specific details. The prompt is then revised to include the specific laws to be summarized. As a result, the model generates an improved response that offers a more comprehensive summary of Newton's laws of motion, addressing the user's feedback.

Question: Which of the following is not a primary color?

Model Response (Initial): The color that is not a primary color is D. Green.

User Feedback: While the initial response is correct, it would be helpful to provide a brief explanation of primary colors to provide more context and educational value to the users.

Updated Prompt: Which of the following colors is not considered a primary color? Select the letter corresponding to the correct choice.

Model Response (Improved): The color that is not considered a primary color is D. Green. Primary colors are the basic colors that cannot be created by mixing other colors, and they are used to create all other colors through combinations.

In this example, the user provides feedback on the initial response, suggesting the inclusion of an explanation about primary colors to provide more educational value. The prompt is then revised to include a brief context about primary colors and their significance. As a result, the model generates an improved response that not only provides the correct answer but also offers a concise explanation of primary colors, addressing the user's feedback.

User Feedback Loop

At Anote, we prioritize accuracy by utilizing human feedback to refine our large language model. When you prompt the model, it generates an answer based on its capabilities. However, if the generated answer is incorrect or inaccurate, you have the opportunity to modify it based on your own expertise. Incorporating user feedback into the training process allows the model to learn from its mistakes and improve its understanding of questions and answers. As you continue to provide corrections, the model adapts and adjusts its responses accordingly. This iterative feedback loop refines the model's responses and drives continuous improvement, resulting in higher accuracy over time. Our goal is to reach a point where the model consistently delivers responses that match your expectations, ultimately boosting its performance and ensuring a higher level of accuracy. To account for user feedback, we currently take 3 approaches:

Few-Shot Prompting

This technique involves providing additional prompts or examples to the model, allowing it to learn from a small number of labeled instances and generalize to similar cases. You can read more about this in the Few Shot Prompting Section.

By exposing the model to more diverse examples, certain LLMs can better understand and generate accurate responses.

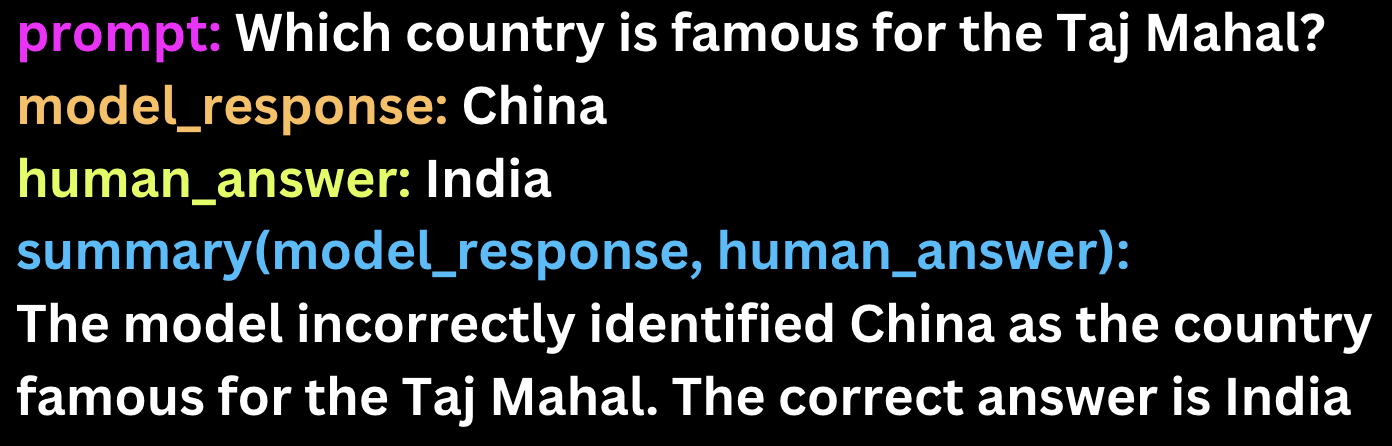

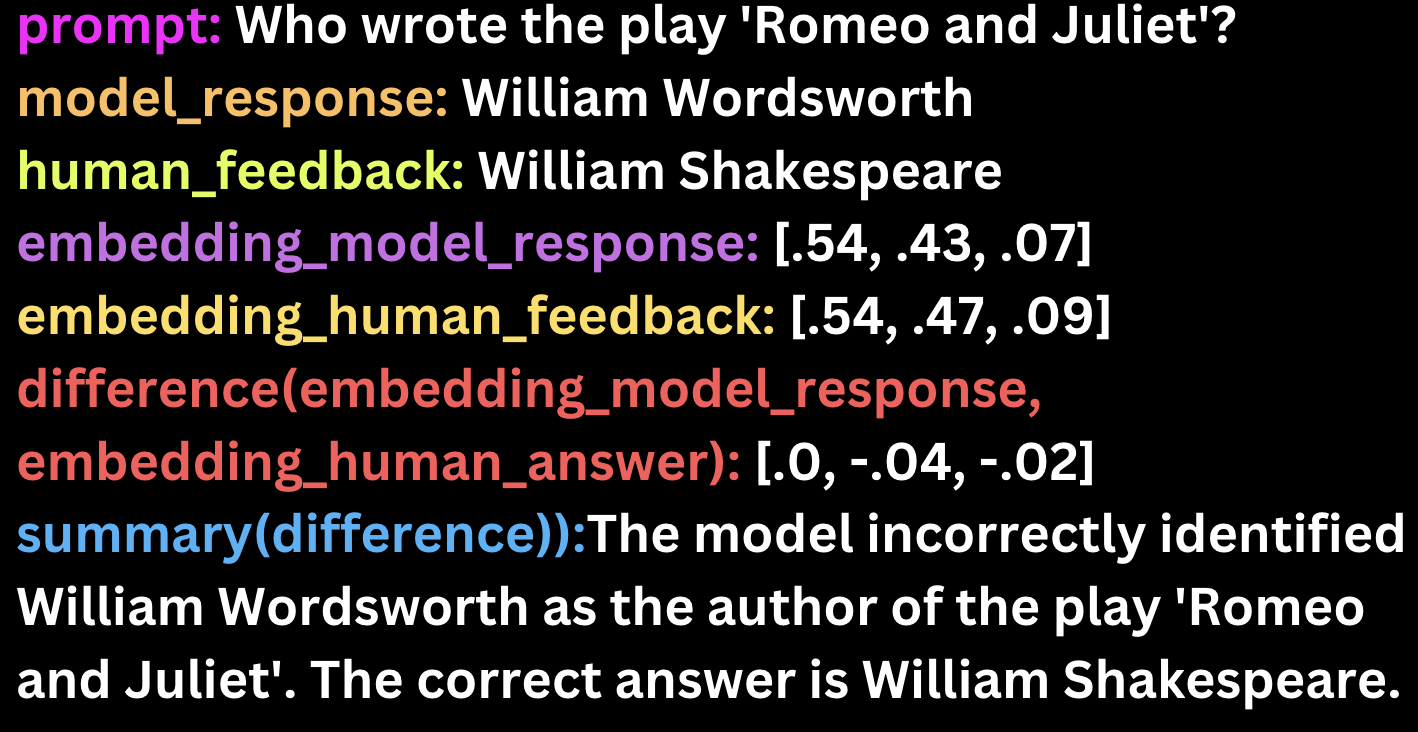

Summary of Differences

This technique focuses on summarizing the discrepancies between the model's predicted answer and the human feedback. By highlighting the differences, it provides valuable insights into areas where the model needs improvement. Analyzing these differences helps in fine-tuning the model and addressing specific shortcomings.

By summarizing the differences between model responses and human input, we can obtain more tailored, domain specific prompts on the rest of our data with a shorter context window, saving a lot of time and money.

Embedding Research

This technique utilizes advanced embedding techniques to represent the predicted and actual answers as numerical vectors. By comparing the embeddings, we can quantify the differences between the model's response and the human feedback. This information is then fed into the model to enhance its understanding and alignment with human expectations.

By summarizing the differences between model responses and human input in the embedding space, we can obtain even better and more tailored responses, while taking up less space, which saves a lot of time and money.

Why Human Feedback

By actively prompting the model and incorporating user feedback, the performance and accuracy of language models can be significantly improved. This iterative approach ensures that the model learns from user interactions and continuously adapts to provide more accurate and relevant responses over time.

Download Results

When finished incorporating human feedback, the user can export their data as a CSV, and download the results. These results will be tailored to the user, and are more domain specific then general purpose results.

Summary

Active prompting empowers the model to learn from human feedback, producing high-quality results from LLMs. These results have less hallucinations and higher accuracies than zero shot models, and are more tailored and domain specific than general models. Active prompting gives users more control and influence over the output of language models, making it a valuable technique for various applications such as question-answering, content generation, and document analysis. In the following pages, we will explore how Anote utilizes active prompting in three important areas: Summarization, Question and Answering, and Semi-Structured Questions. By examining these examples, we will understand how active prompting enhances user interaction and maximizes the capabilities of language models.