Limitations of Few-Shot Learning

Pushing the Boundaries of Few-Shot Learning

Few-shot learning makes it possible to get useful results in natural language processing from only a handful of labeled examples. The approach still has clear limitations, though. To get the most out of it, we need to address those limitations and explore more advanced techniques.

Limitations of Few-Shot Learning for Classification

While few-shot learning has shown great promise, it also faces certain limitations that need to be addressed:

-

Sensitive to Noisiness: Few-shot learning models, such as Siamese Networks, can be sensitive to noisy or ambiguous data. This sensitivity can lead to potential inaccuracies in classification tasks and affect the overall performance of the model.

-

Handling Imbalanced Data Distributions: Imbalanced data distributions, where one class has significantly more examples than others, pose a challenge for few-shot learning. These imbalances can impact the model's ability to generalize and make accurate predictions, particularly for underrepresented classes.

-

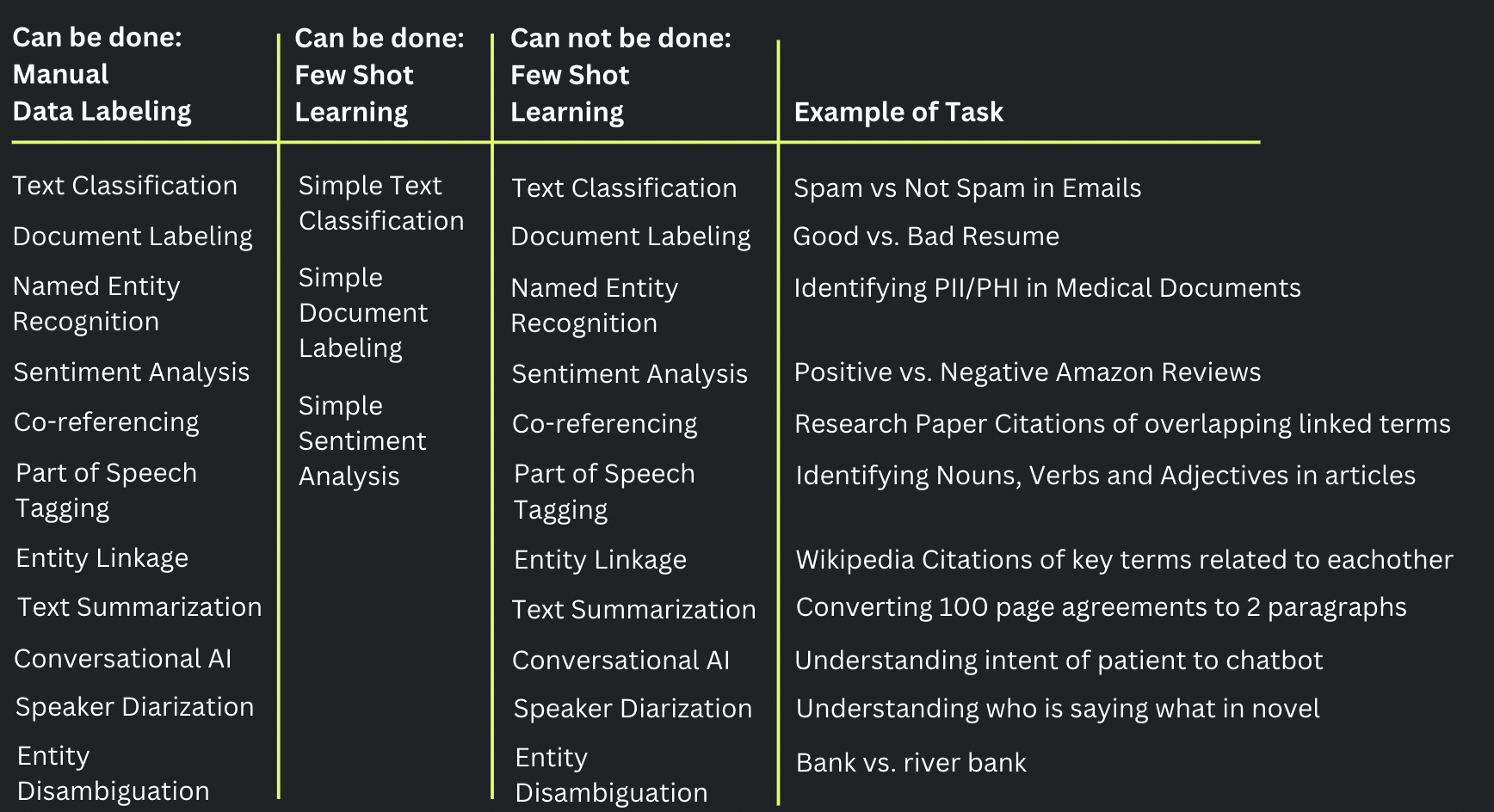

Limited Applicability: While few-shot learning techniques, like Siamese Networks, work well for simple text classification and sentiment analysis tasks, they may not be as effective for more complex tasks such as named entity recognition. These tasks often require detailed information extraction, which goes beyond the capabilities of traditional few-shot learning approaches.

To overcome these limitations, researchers and practitioners are actively working on developing innovative solutions and advancing the field of few-shot learning.
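The noise-sensitivity limitation can be illustrated with a small sketch. It does not train a Siamese Network. As a stand-in, it assumes the examples have already been embedded as 2-D vectors (the values are made up) and classifies a query by its nearest class centroid. The names `centroid`, `classify`, `clean`, and `noisy` are illustrative only.

```python
import math


def centroid(vectors):
    """Mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def classify(query, support):
    """Return the label whose support-set centroid is closest to the query.

    support maps each label to a list of embedding vectors.
    """
    best_label, best_dist = None, math.inf
    for label, vectors in support.items():
        dist = math.dist(query, centroid(vectors))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


# Hypothetical 2-D embeddings: two support examples per class.
clean = {
    "positive": [[1.0, 0.0], [0.8, 0.2]],
    "negative": [[-1.0, 0.0], [-0.8, 0.2]],
}

# Same support set, except one "negative" example is actually a
# positive-looking point that was mislabeled.
noisy = {
    "positive": [[1.0, 0.0], [0.8, 0.2]],
    "negative": [[-1.0, 0.0], [1.8, 0.0]],
}

query = [0.3, 0.1]
print(classify(query, clean))  # positive
print(classify(query, noisy))  # negative
```

With two examples per class, one bad label moves the "negative" centroid far enough to flip the prediction. A larger support set would dilute the effect of the same error.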

Limitations of Few-Shot Learning for Prompting

Few-shot prompting runs into its own limits. A language model's context window and token limits restrict how many examples and how much instruction a prompt can hold, so it is essential to make the best use of that space. Here are some notable advancements in few-shot prompting techniques:

Adaptive Prompting Strategies

Adaptive prompting strategies aim to dynamically adjust the prompts based on the input data and task requirements. These strategies involve techniques such as prompt engineering, contextual rewriting, or learned prompt generation. By tailoring the prompts to the specific context and task, adaptive prompting strategies enhance the model's ability to capture relevant information and improve prediction accuracy.
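One simple form of adaptive prompting is to choose the few-shot examples per input instead of using a fixed set. The sketch below is a minimal illustration under stated assumptions: the example pool is invented, similarity is plain word overlap (Jaccard) instead of learned embeddings, and the function names and prompt layout are hypothetical.

```python
def tokenize(text):
    """Lowercased set of whitespace-separated words."""
    return set(text.lower().split())


def jaccard(a, b):
    """Word-overlap similarity between two token sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_examples(query, pool, k=2):
    """Pick the k pool examples most similar to the query."""
    q = tokenize(query)
    ranked = sorted(
        pool, key=lambda ex: jaccard(q, tokenize(ex["text"])), reverse=True
    )
    return ranked[:k]


def build_prompt(query, pool, k=2):
    """Assemble a few-shot prompt from the selected examples."""
    lines = ["Classify the sentiment as positive or negative.", ""]
    for ex in select_examples(query, pool, k):
        lines += [f"Text: {ex['text']}", f"Label: {ex['label']}", ""]
    lines += [f"Text: {query}", "Label:"]
    return "\n".join(lines)


# Invented example pool for illustration.
POOL = [
    {"text": "the battery life is great", "label": "positive"},
    {"text": "the screen cracked after a week", "label": "negative"},
    {"text": "delivery was slow and the box was damaged", "label": "negative"},
    {"text": "great sound quality for the price", "label": "positive"},
]

query = "the battery died after a week"
selected = select_examples(query, POOL)
prompt = build_prompt(query, POOL)
print(prompt)
```

For this query, the two selected examples are the ones mentioning "after a week" and "battery", so the prompt shows the model cases that resemble the input. Swapping `jaccard` for an embedding-based similarity would follow the same structure.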

Prompt Compression and Summarization

Prompt compression and summarization techniques focus on condensing prompts while preserving their essential information. These techniques use methods like information retrieval, keyphrase extraction, or summarization algorithms to reduce the prompt length without sacrificing its meaning. Fitting more useful information into a limited token budget leaves room for additional examples or longer inputs, which improves the overall effectiveness of few-shot learning models.
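The token-budget side of this idea can be sketched without a summarization model. The example below is a deliberately simple stand-in: it selects which examples to keep and does not rewrite or summarize them. It also assumes that whitespace-separated words approximate tokens, which a real system would replace with the target model's own tokenizer. `count_tokens` and `pack_examples` are hypothetical helper names.

```python
def count_tokens(text):
    # Assumption: one whitespace-separated word is roughly one token.
    return len(text.split())


def pack_examples(examples, query, budget):
    """Greedily keep examples, in order, while the prompt fits the budget.

    Returns the kept examples and the total token count including the query.
    """
    used = count_tokens(query)
    kept = []
    for ex in examples:
        cost = count_tokens(ex)
        if used + cost > budget:
            continue  # too long; a later, shorter example may still fit
        kept.append(ex)
        used += cost
    return kept, used


examples = [
    "Text: great phone Label: positive",
    "Text: the package arrived late and the box was badly damaged Label: negative",
    "Text: awful service Label: negative",
]
query = "Text: works as advertised Label:"

kept, used = pack_examples(examples, query, budget=16)
print(kept)
print(used)  # 15
```

Here the long second example is skipped so that two short ones fit. A summarization step could instead shorten the long example and pass the result through the same budget check.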

Contextual Prompting

Contextual prompting techniques leverage the contextual information surrounding prompts to enhance their impact. These techniques consider the broader context of the document or conversation, utilize contextual embeddings, or leverage context-aware models. By incorporating contextual cues into the prompts, contextual prompting techniques enable the model to better comprehend the task and generate accurate responses.
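As a minimal sketch of the conversational case, the function below prepends the most recent turns of a dialogue to the question so the model sees the surrounding context. The conversation, the prompt layout, and the name `build_contextual_prompt` are all assumptions made for illustration. It does not use contextual embeddings or a context-aware model.

```python
def build_contextual_prompt(history, question, max_turns=2):
    """Build a prompt that includes the last max_turns conversation turns.

    history is a list of (speaker, text) tuples, oldest first.
    """
    recent = history[-max_turns:] if max_turns > 0 else []
    lines = ["Conversation so far:"]
    for speaker, text in recent:
        lines.append(f"{speaker}: {text}")
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)


# Invented conversation for illustration.
history = [
    ("User", "I ordered a laptop last week."),
    ("Agent", "Thanks, I can see the order."),
    ("User", "It arrived with a cracked screen."),
]

contextual_prompt = build_contextual_prompt(
    history, "What is the customer's problem?", max_turns=2
)
print(contextual_prompt)
```

Limiting the history to `max_turns` keeps the context within the token budget, at the cost of dropping older turns that may still matter.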

Unlocking the Full Potential

Few-shot learning will deliver more as its limitations are addressed and prompting techniques improve. Overcoming the limitations described above and making better use of the prompt will lead to more accurate and robust results across natural language processing tasks.

The ongoing research and development in this area hold great promise for pushing the boundaries of what is possible with few-shot learning. With smarter and more efficient approaches to prompting, we can harness the power of limited labeled data and bring about transformative advancements in natural language processing.