Few Shot NER

Few Shot Named Entity Recognition

Named Entity Recognition (NER) is a crucial task in Natural Language Processing (NLP) that involves identifying and classifying named entities within text. Incorporating few-shot learning techniques into Named Entity Recognition (NER) tasks has revolutionized the efficiency of training models with limited labeled data.

Utilizing FSNER for Named Entity Recognition

FSNER is a pre-trained NER model based on BERT architecture. It can identify entities belonging to different categories, such as restaurants and languages, among others. With FSNER, we can extract named entities from text efficiently and accurately. Let's dive into an example to illustrate how FSNER works.

First, we import the necessary libraries and initialize the FSNER tokenizer and model:

import json

from fsner import FSNERModel, FSNERTokenizerUtils, pretty_embed

tokenizer = FSNERTokenizerUtils("sayef/fsner-bert-base-uncased")

model = FSNERModel("sayef/fsner-bert-base-uncased")

Next, we prepare the input data, including query texts and supporting texts. The supporting texts contain example sentences that encompass entities of interest, encapsulated within [E] and [/E] tags:

query_texts = [

"Does Luke's serve lunch?",

"Chang does not speak Taiwanese very well.",

"I like Berlin."

]

support_texts = {

"Restaurant": [

"What time does [E] Subway [/E] open for breakfast?",

"Is there a [E] China Garden [/E] restaurant in Newark?",

"Does [E] Le Cirque [/E] have valet parking?",

"Is there a [E] McDonalds [/E] on Main Street?",

"Does [E] Mike's Diner [/E] offer huge portions and outdoor dining?"

],

"Language": [

"Although I understood no [E] French [/E] in those days, I was prepared to spend the whole day with Chien-chien.",

"Like what the hell's that called in [E] English [/E]? I have to register to be here since I'm a foreigner.",

"So, I'm also working on an [E] English [/E] degree because that's my real interest.",

"Al-Jazeera TV station, established in November 1996 in Qatar, is an [E] Arabic-language [/E] news TV station broadcasting global news and reports nonstop.",

"They think it's far better for their children to be here improving their [E] English [/E] than sitting at home in front of a TV.",

"The only solution seemed to be to have her learn [E] French [/E].",

"I have to read sixty pages of [E] Russian [/E] today."

]

}

We tokenize the queries and supports using the FSNER tokenizer. Then, we leverage the FSNER model to predict the start and end positions of the named entities in the queries:

queries = tokenizer.tokenize(query_texts).to(device)

supports = tokenizer.tokenize(list(support_texts.values())).to(device)

p_starts, p_ends = model.predict(queries, supports)

output = tokenizer.extract_entity_from_scores(query_texts, queries, p_starts, p_ends,

entity_keys=list(support_texts.keys()), thresh=0.50)

print(json.dumps(output, indent=2))

pretty_embed(query_texts, output, list(support_texts.keys()))

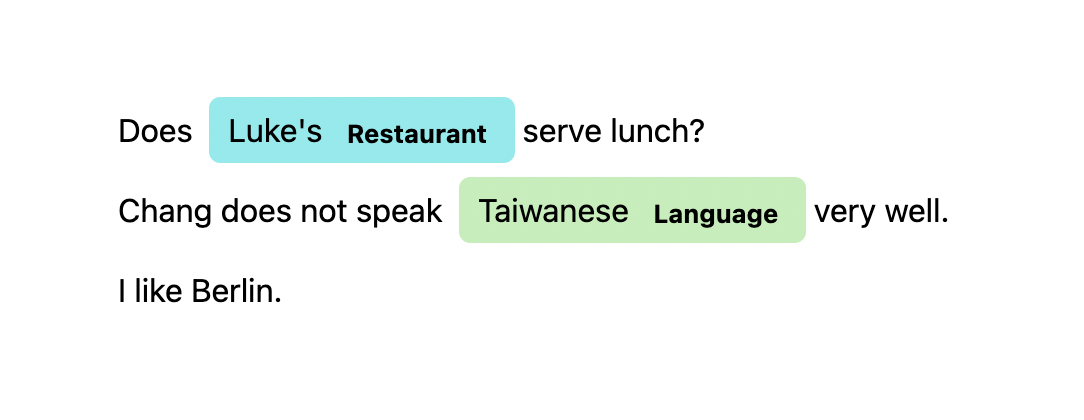

The output variable contains the extracted named entities from the queries, organized by their respective entity categories. The pretty_embed function displays the original query texts with highlighted named entities for visual clarity.

Summary

By utilizing FSNER, we can efficiently extract named entities from text, improving information extraction and analysis in various applications. We demonstrated how to tokenize queries and supports, predict entity positions, and extract named entities using FSNER. The iterative feedback loop with human input allows continuous improvement and refinement of the model's accuracy in identifying named entities within text.