Classifying Dangerous Social Media Posts

Sector: Non-Profit

Capability: Text Classification

Deborah works at a non-profit organization that aims to analyze Reddit data for public safety purposes in New York City. Her objective was to build a pipeline that scrapes Reddit posts from the New York City area and uses a model to determine whether each post contains dangerous content. To train that model, she needed annotated data.

Deborah allocated approximately $50,000 to a manual data labeling company to perform the annotations. Because the task required subject matter expertise, the process took around four months. When the labeled data came back, however, Deborah discovered numerous problems: many labels were incorrect and some were outright absurd, leaving the data too poorly labeled to train an AI model effectively. She also considered introducing a new class, "semi-dangerous," but realized that having the labeling team manually relabel every existing data row would take far too long.

This project exposed the core challenges of manual data labeling: cost, time, expertise, accuracy, and changing requirements. Because of these difficulties, Deborah's AI project ultimately failed. Let's see whether we can use Anote to make this project succeed.

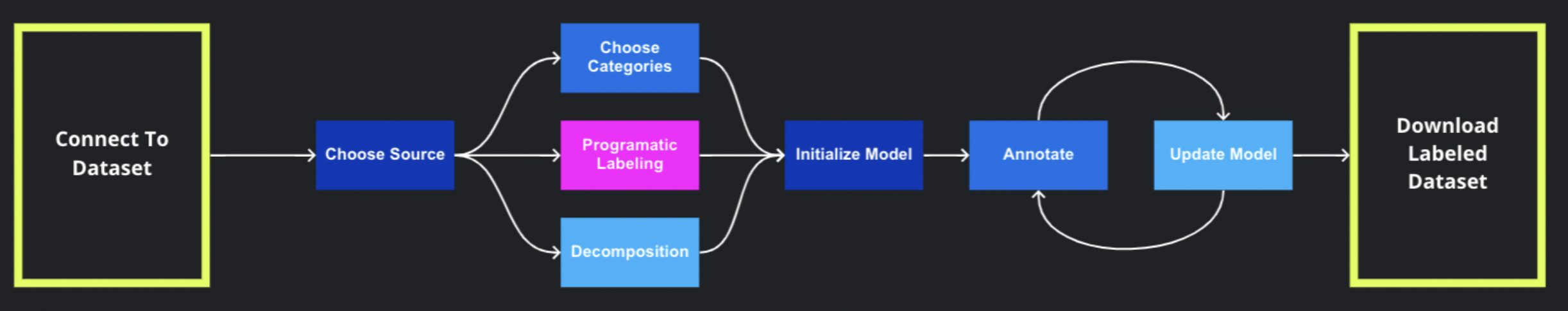

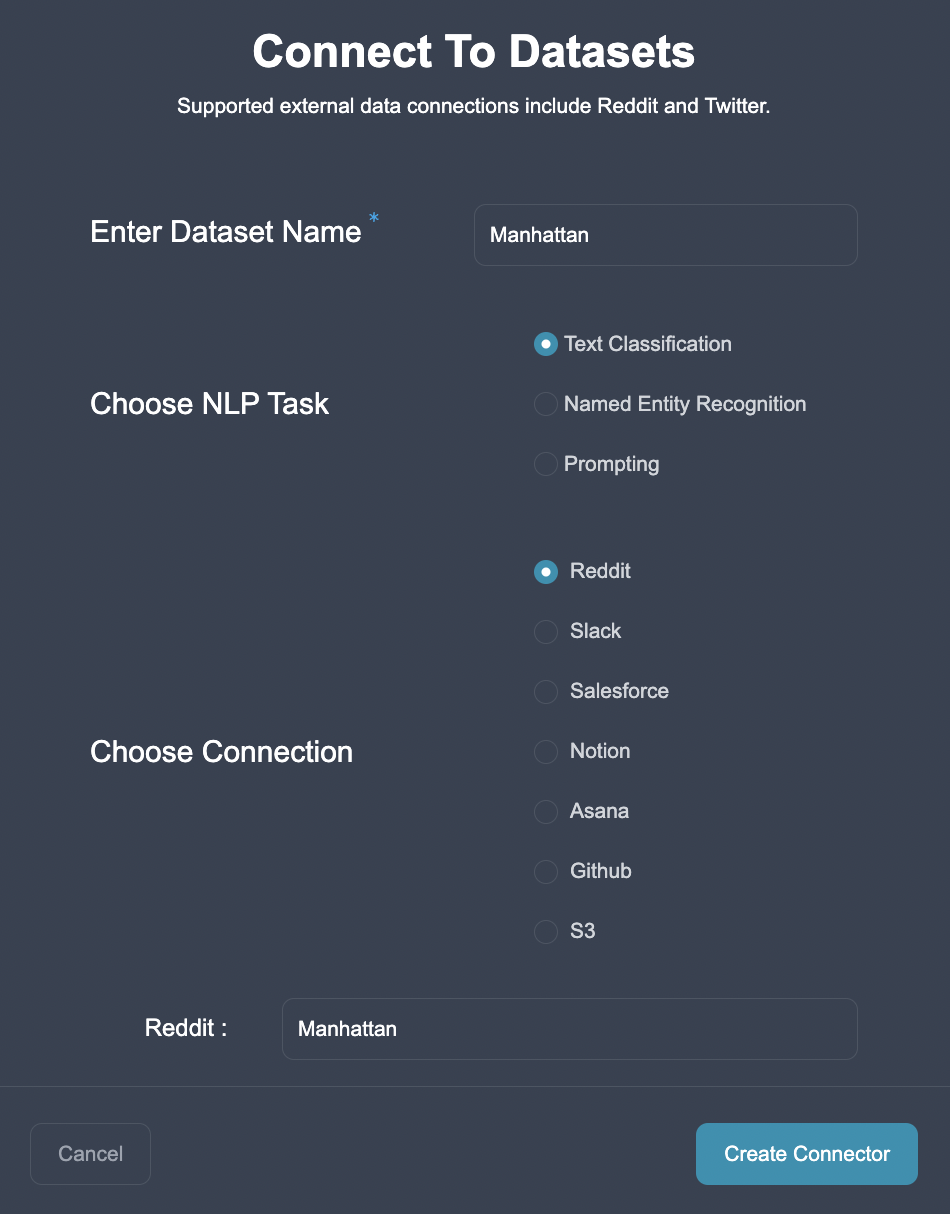

Upload Data

Start by uploading the data using the Connect to Datasets format, choose Text Classification as the NLP task, and select the Reddit connector. Set the thread to Manhattan, since we will be pulling Reddit posts from New York City.
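To make the connector step more concrete, here is a minimal sketch of what pulling posts from a subreddit can look like. It assumes the Manhattan thread corresponds to the r/Manhattan subreddit and uses Reddit's public JSON listing endpoint; it is not Anote's Reddit connector, and the helper names `listing_url`, `fetch_listing`, and `posts_from_listing` are hypothetical. Each post is flattened into one row whose `text` combines the title and body, which is the shape a text classifier expects.

```python
import json
import urllib.request
from typing import Dict, List

# Hypothetical helpers for illustration only; not Anote's connector API.

def listing_url(subreddit: str, limit: int = 25) -> str:
    """Build the public JSON listing URL for a subreddit's newest posts."""
    return f"https://www.reddit.com/r/{subreddit}/new.json?limit={limit}"

def fetch_listing(subreddit: str, limit: int = 25) -> Dict:
    """Download one page of posts; Reddit expects a descriptive User-Agent."""
    req = urllib.request.Request(
        listing_url(subreddit, limit),
        headers={"User-Agent": "post-classifier-sketch/0.1"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)

def posts_from_listing(payload: Dict) -> List[Dict[str, str]]:
    """Flatten a Reddit listing payload into one row per post."""
    rows = []
    for child in payload.get("data", {}).get("children", []):
        if child.get("kind") != "t3":  # "t3" marks a post; skip anything else
            continue
        post = child.get("data", {})
        text = (post.get("title", "") + "\n" + post.get("selftext", "")).strip()
        rows.append({"id": post.get("id", ""), "text": text})
    return rows
```

For example, `posts_from_listing(fetch_listing("Manhattan"))` would return rows ready to be labeled. Parsing is kept separate from fetching so the parsing logic can be checked offline against a saved payload.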

Customize Questions

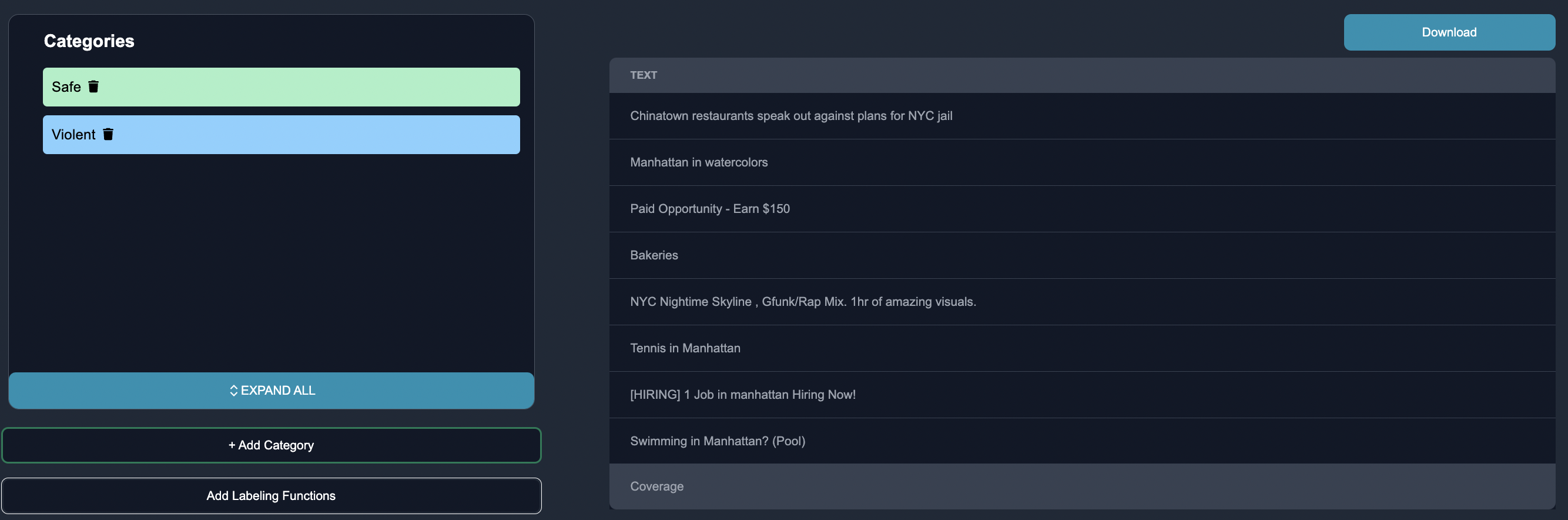

Enter the relevant categories for the Reddit posts.

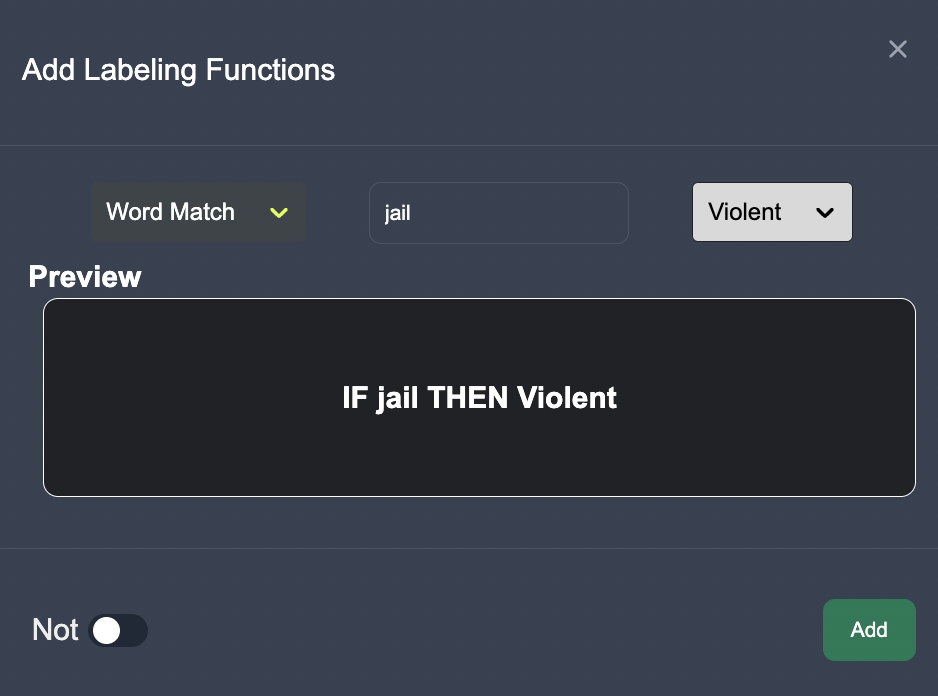

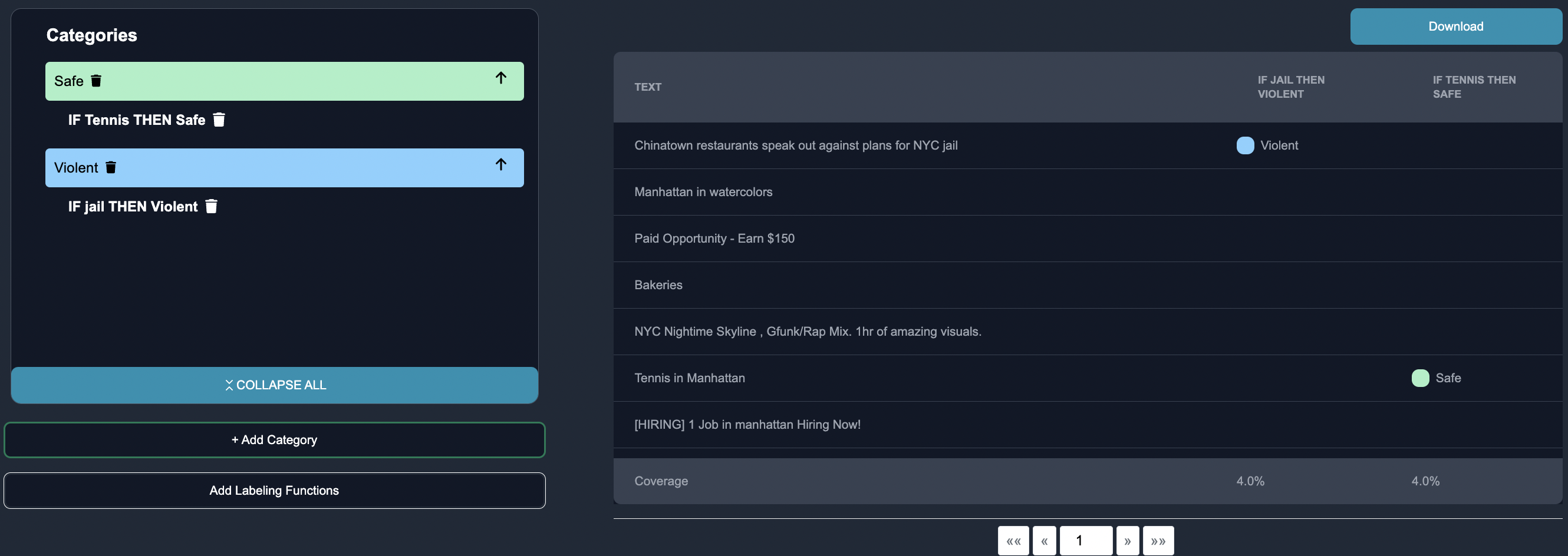

We can add labeling functions to highlight keywords and entities associated with specific categories.

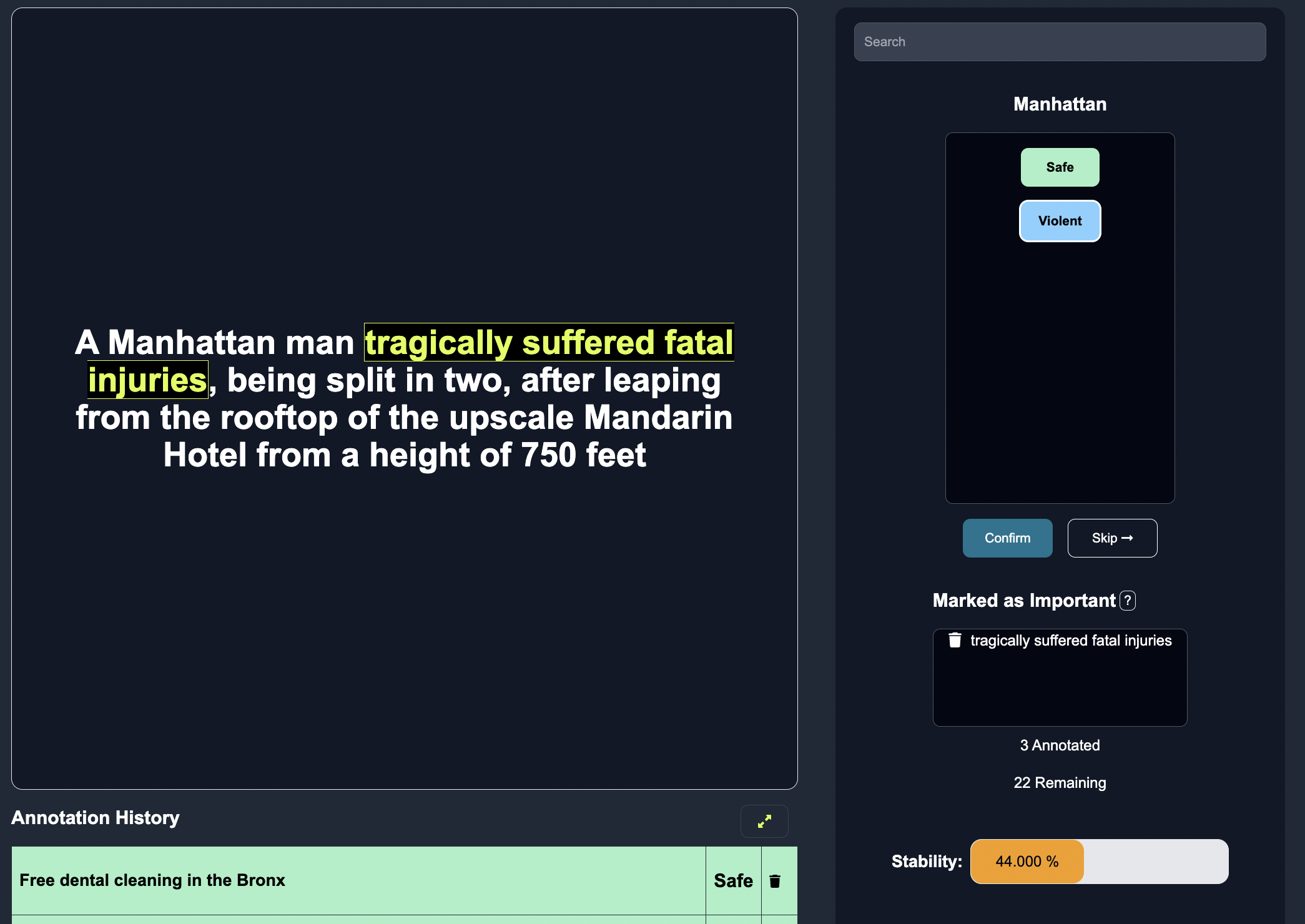
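A keyword labeling function can be sketched in a few lines. The category names and keyword lists below are hypothetical (Anote's own labeling-function format may differ): the function returns the first category whose keyword appears as a whole word or phrase, and abstains (returns `None`) otherwise. A second helper returns the character spans of every match, which is the kind of information an interface could use to highlight keywords in a post.

```python
import re
from typing import Dict, List, Optional, Tuple

ABSTAIN = None

# Hypothetical categories and keywords; dict order sets priority,
# so "dangerous" is checked before "semi-dangerous".
KEYWORDS: Dict[str, List[str]] = {
    "dangerous": ["gun", "shooting", "stabbing", "bomb threat"],
    "semi-dangerous": ["fight", "suspicious", "harassment"],
}

def _pattern(word: str) -> str:
    # Word boundaries keep "gun" from matching inside "Gunther".
    return r"\b" + re.escape(word) + r"\b"

def keyword_lf(text: str, keywords: Dict[str, List[str]] = KEYWORDS) -> Optional[str]:
    """Return the highest-priority category with a keyword match, else abstain."""
    for category, words in keywords.items():
        if any(re.search(_pattern(w), text, flags=re.IGNORECASE) for w in words):
            return category
    return ABSTAIN

def keyword_spans(
    text: str, keywords: Dict[str, List[str]] = KEYWORDS
) -> List[Tuple[int, int, str]]:
    """List (start, end, category) for every keyword match, sorted by position."""
    spans = []
    for category, words in keywords.items():
        for w in words:
            for m in re.finditer(_pattern(w), text, flags=re.IGNORECASE):
                spans.append((m.start(), m.end(), category))
    return sorted(spans)
```

Letting the function abstain matters: a post with no matching keywords is left for the model and the annotator rather than being forced into a category.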

Annotate

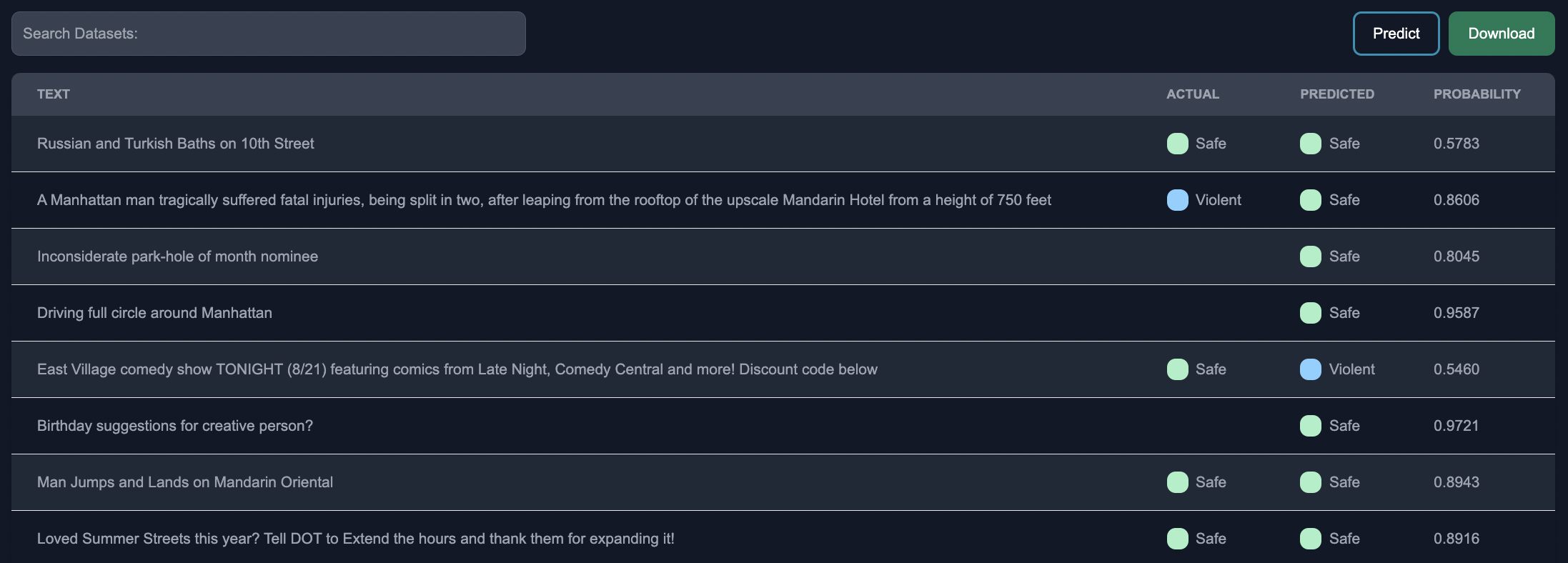

Provide human feedback by assigning the correct category to a few edge cases. As we annotate more posts, the model learns from these labels and its predictions improve over time.
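The loop of "label a few edge cases, retrain, repeat" can be illustrated with a small active-learning sketch. Anote's actual model is not described here, so this swaps in a multinomial Naive Bayes classifier with add-one smoothing, plus margin-based uncertainty sampling to choose which unlabeled post to show the annotator next. The class and function names are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self._reset()

    def _reset(self) -> None:
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        self.doc_counts: Counter = Counter()
        self.vocab: set = set()

    def fit(self, examples: List[Tuple[str, str]]) -> "NaiveBayes":
        # Retrain from scratch on every (text, label) annotation collected so far.
        self._reset()
        for text, label in examples:
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict_proba(self, text: str) -> Dict[str, float]:
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label, n_docs in self.doc_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)
            for tok in tokenize(text):
                if tok in self.vocab:  # words never seen in training are ignored
                    score += math.log((counts[tok] + 1) / denom)
            log_scores[label] = score
        # Turn log scores into probabilities, subtracting the max for stability.
        top = max(log_scores.values())
        exps = {k: math.exp(s - top) for k, s in log_scores.items()}
        z = sum(exps.values())
        return {k: e / z for k, e in exps.items()}

def most_uncertain(model: NaiveBayes, pool: List[str]) -> str:
    """Pick the unlabeled post whose top two class probabilities are closest."""
    def margin(text: str) -> float:
        probs = sorted(model.predict_proba(text).values(), reverse=True)
        return probs[0] - (probs[1] if len(probs) > 1 else 0.0)
    return min(pool, key=margin)
```

In use, each new human label is appended to the training list, `fit` is called again, and `most_uncertain` proposes the next edge case. Focusing annotation effort on low-margin posts is one common way a handful of labels can improve predictions quickly.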

Download Results

When you finish annotating, download the labeled Reddit posts. We can now determine whether a specific post from New York City contains dangerous content.
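Once the results are downloaded, a short script can pull out the posts that need attention. This sketch assumes the export is a CSV file with a `text` column and a `label` column; those column names, the label values, and the helper names are hypothetical, so adjust them to match the actual file.

```python
import csv
import io
from collections import Counter
from typing import Dict, Iterable, List

def load_results(csv_text: str, text_col: str = "text", label_col: str = "label") -> List[Dict[str, str]]:
    """Parse the exported CSV and check that the expected columns exist."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = {text_col, label_col} - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"export is missing columns: {sorted(missing)}")
    return list(reader)

def dangerous_posts(
    rows: List[Dict[str, str]],
    label_col: str = "label",
    flagged: Iterable[str] = ("dangerous",),
) -> List[Dict[str, str]]:
    """Keep only rows whose label is one of the flagged categories."""
    wanted = {f.lower() for f in flagged}
    return [r for r in rows if r[label_col].strip().lower() in wanted]

def label_counts(rows: List[Dict[str, str]], label_col: str = "label") -> Counter:
    """Count how many posts fall into each category."""
    return Counter(r[label_col].strip() for r in rows)
```

To read a downloaded file, pass `open("results.csv", newline="").read()` to `load_results`. Passing `flagged=("dangerous", "semi-dangerous")` widens the filter if a "semi-dangerous" class is added later, without changing the rest of the script.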